Thanks for the friendly words! Highly appreciated.

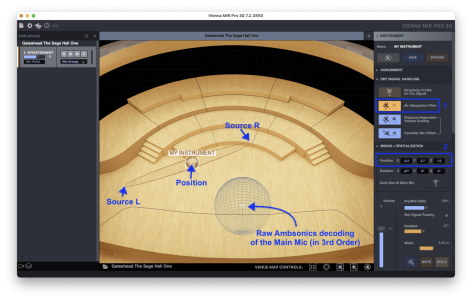

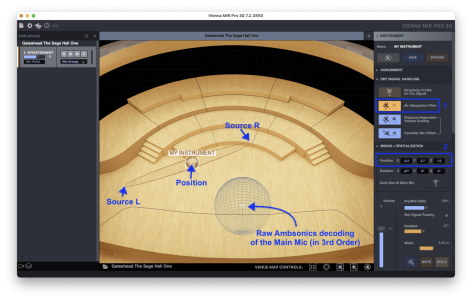

This screenshot illustrates my answers below:

When using Dry mode, how does MIR 3D position an instrument?

The dry signal is encoded in Ambisonics and is placed at the exact x/y/z coordinates where the impulse source was located during the recording session in a MIR Venue. (... see #2 in the screenshot above). It gets decoded together with the wet signal as if it had been there from the beginning, but without the artefacts that the direct signal components typically exhibit after the convolution process.
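To make the encoding step a bit more concrete, here is a minimal Python sketch of first-order Ambisonics encoding for a single mono sample. This is not MIR 3D's actual code: it covers first order only (MIR 3D goes up to 3rd), the function name `encode_foa` is made up for illustration, and it assumes the common AmbiX convention (channel order W, Y, Z, X with SN3D normalization; x pointing to the front, y to the left, z up).

```python
import math


def encode_foa(sample, x, y, z):
    """Encode one mono sample into first-order Ambisonics.

    Assumed convention (not taken from MIR 3D): AmbiX, i.e. channel
    order W, Y, Z, X with SN3D normalization; x = front, y = left, z = up.
    Only the direction of (x, y, z) is used here, not the distance.
    """
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        # Source at the listener position: no direction, omni channel only.
        return (sample, 0.0, 0.0, 0.0)
    w = sample            # omnidirectional component
    y_ch = sample * y / r  # left/right component
    z_ch = sample * z / r  # up/down component
    x_ch = sample * x / r  # front/back component
    return (w, y_ch, z_ch, x_ch)


# A source straight ahead ends up in W and X only.
front = encode_foa(1.0, 1.0, 0.0, 0.0)
# A source hard left ends up in W and Y only.
left = encode_foa(0.5, 0.0, 2.0, 0.0)
```

Note that all four channels carry the same sample at the same instant; only the gains differ from channel to channel.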

For example, does it include the Haas effect?

No, not at all. Ambisonics is a coincident format.

BTW: The Haas effect is quite problematic when a signal is folded down to a narrower format, e.g. from stereo to mono.
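A small worked example of why that is, as a sketch under simple assumptions: the left channel carries the signal, the right channel carries the same signal at equal level but delayed, and the mono fold-down is 0.5 * (L + R). The sum then behaves like a comb filter with magnitude |cos(pi * f * delay)|. The function name `mono_fold_gain` is hypothetical.

```python
import math


def mono_fold_gain(freq_hz, delay_s):
    """Magnitude response of 0.5 * (s(t) + s(t - delay)) at one frequency.

    Assumes both channels have equal level and differ only by the delay.
    """
    return abs(math.cos(math.pi * freq_hz * delay_s))


delay = 0.010  # a 10 ms inter-channel delay

# Notches sit at odd multiples of 1 / (2 * delay): 50 Hz, 150 Hz, 250 Hz, ...
notch = mono_fold_gain(50.0, delay)
# Frequencies at multiples of 1 / delay pass at full level: 100 Hz, 200 Hz, ...
peak = mono_fold_gain(100.0, delay)
```

With a coincident format there is no inter-channel delay, so `delay` is zero and the gain is 1 at every frequency.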

Does it pan the source audio from left and right?

Errr ... yes, of course. 8-) Just look at the MIR Icon and you will see exactly where the left and right channels will be placed in the virtual sound field. (... see screenshot)

Does it use a head-transfer function to eq the left/right channels based on the sound source position?

No, as this would work only with headphones. But you can easily add binaural effects with external tools of your choice if you set MIR 3D to output un-decoded, raw Ambisonics (up to 3rd order). (... see screenshot)

Does it EQ when incorporating the distance to the source?

Yes, you can switch on a so-called Air Absorption Filter in MIR's Dry Signal Handling. (... see #1 in the screenshot above)

I'm sure it does something very sophisticated, I'm mainly wondering how much more MIR 3D does than I would if I were trying to manually position a sound source. And again, this is before incorporating reverb.

As I wrote above: The idea is to replace the direct signal components with an "ideal" version derived from the input source. IOW: Sophisticated, yes, but completely transparent and logical, concept-wise.

You might also be interested in the little primer I wrote for legacy MIR Pro that covers many of the underlying ideas and concepts: "Think MIR!"

Enjoy MIR 3D!
