DAWbench

- Thread starter Pictus

- Start date

Cinemachine

aka Peter

I don't think 'we' is accurate. Creators hit hardware (e.g. CPU) limitations; the day does come.

If the project runs at 75% CPU on one computer and 25% CPU on another computer then it runs on both computers. So from the standpoint of writing/producing music there is no difference between those two computers.

That's what I'm getting at. With dawbench there's nothing being measured that has any relationship to productivity or workflow. It's a benchmarker's playground without a tie to reality.

The response to this fact is the "but one day" argument: if I lower my CPU usage then that's good because one day I'll need it. But we never get to that day.

Some creatives are proactive in hardware stepping; after all, this is what we call the 'Enthusiast' range. It's comprehensible to want to perform existing tasks with a lower ceiling. This has its benefits: whether it's to spread core calculations to manage productivity or to reduce heat and noise, the other main factor is robustness and compatibility (...I hate my Threadripper!). If your system shows no signs of labour, ever, then I agree there is no need to consider a new machine. But if one user is hitting 75% while another is hitting 25%, data can be taken from this, and one machine could be costing a lot less to cool and run on a daily basis. Pushing hardware at a high temperature and operational level also takes its toll and reduces the lifespan of the components.

I agree that DAWbench needs to be taken with a pinch of salt. It's not a project identical to our own use, and therefore it could be superfluous in comparison to 'real-world' production.

I think this is the same discussion: buying a system with a bigger spec than what is currently required is sensible for any form of media creation. I imagine most creators buy a bigger spec than what is currently required, to future-proof for creative requirements. In the GPU market, anyone who invested in an RTX card gained big benefits, and I don't mean things like real-time game ray tracing in Unreal. Adobe and (broken link removed) have AI for RTX cards that significantly boosts productivity in 3D. But nobody bought these RTX cards for these exact tools. The hardware enables the production of software.

You do you, but that’s like buying a gallon container to hold a pint of water instead of just buying a pint container.

The day does come. We have threads about user hardware issues, whether it's to set up VEPro or simply to overclock a machine for better performance. More to the point, there are also posts from users unable to handle new libraries, or stating concerns or problems about the CPU and gigabytes of RAM eaten by the Spitfire and Sine players. I wouldn't suggest being an early adopter of hardware, but if you have the headroom then you are less likely to face these problems, keeping your attention where it should be: on the audio.

The developer isn't always going to trim it down; if we want bigger and better, it comes at a cost (BBC Pro is like 600 GB?): more samples, more code, more RAM, more CPU usage. We have the data to see where the demand is; our DAWs show it. To come back to both your comments, future-proofing beyond existing requirements is warranted, as it's informed by our own DAW benchmarks. When you buy, you want it to last for as long as possible.


Cinemachine

aka Peter

Don't forget large quantities of snorted caffeine!

I also think Dawbench is useless... it has nothing to do with real life experience.

It lacks basic metrics like Talent and Vitamin D Deficiency, that's what makes a composer.

thevisi0nary

Senior Member

I agree with this, I just don’t think that’s what they were implying.

I think this is the same discussion, about buying a system with bigger spec than what is required at a current level is sensible for any form of media creation. I imagine most creators buy bigger spec than what is currently required, to future proof for creative requirements.

Hendrixon

Senior New

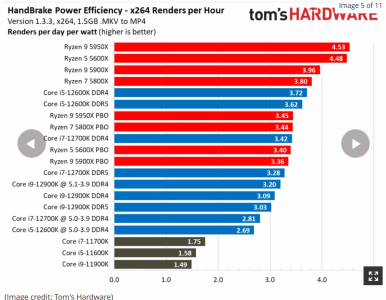

CAS 38 to CAS 40 that's all it takes to wipe off the 12900k gains?!

My feeling is that something is not right in the initial benchmarks...

The VI scores of the Intel 12 chips seemed too out of control as buffer size grew, the numbers don't follow any gradual progress.

I don't know...

G-Skill and Corsair are what I usually get, the latter being my preferred choice. DDR5 is here, and in the near future it will be the only choice for a new "high-end" build. If you buy DDR4 now and upgrade the processor and motherboard in the near future, that DDR4 may not be compatible. AM5, with the socket changing to "Land Grid Array", is due out by June 2022 (see - Link -) and will be jumping on the DDR5 wagon. What next? Perhaps by 2025 there might be integrated, blazing-fast memory and graphics similar to ARM architecture... Jeff Wilcox, the Apple director and engineer responsible for Arm and M1, will be joining Intel. Source: Link1 and Link2

.

Where can I find out about a June release for AM5?

...AM5, with socket changing to "Land Grid Array" due out by June 2022 see - Link - will be jumping on the DDR5 wagon.

.

As far as I can find they've just said second half of 2022 - nothing more specific than that (and so more likely nearer the end of the year).

If it's a June release then I might just wait until then to start my new build!

Unfortunately AMD could delay; your guess is as good as mine, for reasons out of my control. Perhaps tough times due to Covid or other reasons, and stocks of DDR5 are limited.

Where can I find out about a June release for AM5?

As far as I can find they've just said second half of 2022 - nothing more specific than that (and so more likely nearer the end of the year).

If it's a June release then I might just wait until then to start my new build!

AM5 is coming with a new socket design (Land Grid Array), PCIe 5.0 and DDR5 support.

.

Of course. But I just wondered where you got June from at all. I couldn't find rumours of a June release anywhere. Everywhere seems to say simply second half of 2022.

Unfortunately AMD could delay, your guess is as good as mine for reasons out of my control. Perhaps tough times due to Covid or other reasons and stocks for DDR5 are limited.

AM5 is coming with a new socket design (Land Grid Array), PCIe 5.0 and DDR5 support.

.

I don't know either.

CAS 38 to CAS 40 that's all it takes to wipe off the 12900k gains?!

My feeling is that something is not right in the initial benchmarks...

The VI scores of the Intel 12 chips seemed too out of control as buffer size grew, the numbers don't follow any gradual progress.

I don't know...

The memory controller/BIOS of the DDR5 right now is not mature.

For the DDR4 versions they are very picky, but looks like the new BIOS makes things ok when using modern memory modules.

--------------------------------------------------

G-Skill and Corsair is what I usually get, the latter being my preferred choice.

I like Crucial more than G.Skill or Corsair.

Sorry, correct me if I am wrong: second half of 2022 is July 1st? Close enough I guess.

Of course. But I just wondered where you got June from at all. I couldn't find rumours of a June release anywhere. Everywhere seems to say simply second half of 2022.


In reference to the video you posted, wasn't that Corsair featured, or did I confuse that with Crucial?

I like Crucial more than G.Skill or Corsair.

No, forgive me. I misinterpreted the intent of your words.

Sorry, correct me if I am wrong: second half of 2022 is July 1st? Close enough I guess.

Yeah, we'll probably be waiting a while!

I think I'll just go ahead and start plotting my February 5900x build.

TAFKAT

New Member

?

CAS 38 to CAS 40 that's all it takes to wipe off the 12900k gains?!

He clearly stated there was no change in the results going from the CAS 38 to CAS 40.

A similar level of scaling can be seen in the Quad Channel X299 results, indicating the Kontakt benchmark is more responsive to memory bandwidth than memory speed/latency. Z690's new dual memory controller layout with DDR5 allows cross communication that looks to increase the bandwidth in a very beneficial way.

My feeling is that something is not right in the initial benchmarks...

The VI scores of the Intel 12 chips seemed too out of control as buffer size grew, the numbers don't follow any gradual progress.

More Info : Here

Thanks to Pictus for the original heads up and link on another forum

Hendrixon

Senior New

True!

?

He clearly stated there was no change in the results going from the CAS 38 to CAS 40.

How did I get it wrong?

Well, in my defense, I saw the vid around 4am... so I probably fell asleep when he said the new DIMMs are CAS 40 and I woke up to see the chart of 5200 vs 5600.
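For anyone wanting to sanity-check the latency versus bandwidth point above, here is a rough back-of-the-envelope sketch in Python. It assumes the two kits being compared are DDR5-5200 at CAS 38 and DDR5-5600 at CAS 40 (that pairing is an assumption; the thread only mentions CAS 38, CAS 40, and a 5200 vs 5600 chart), running dual channel with a 64-bit bus per channel. The function names are made up for illustration, and the figures are theoretical peaks, not measured results.

```python
def cas_latency_ns(cas_cycles: int, transfer_rate_mts: int) -> float:
    """Absolute CAS latency in nanoseconds.

    DDR memory transfers twice per clock, so one clock period is
    2000 / (transfer rate in MT/s) nanoseconds, and CAS latency is
    cas_cycles of those periods.
    """
    return cas_cycles * 2000.0 / transfer_rate_mts


def peak_bandwidth_gbs(transfer_rate_mts: int, channels: int,
                       bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (8 bytes = 64-bit bus per channel)."""
    return transfer_rate_mts * bus_bytes * channels / 1000.0


# Assumed kits: (label, transfer rate in MT/s, CAS cycles). Hypothetical pairing.
kits = [("DDR5-5200 CL38", 5200, 38), ("DDR5-5600 CL40", 5600, 40)]

for label, rate, cas in kits:
    latency = cas_latency_ns(cas, rate)
    bandwidth = peak_bandwidth_gbs(rate, channels=2)  # dual channel assumed
    print(f"{label}: {latency:.2f} ns CAS latency, {bandwidth:.1f} GB/s peak")
```

Under those assumptions the absolute CAS latency in nanoseconds barely moves between the two kits, while the theoretical peak bandwidth rises with the transfer rate. That would be consistent with a benchmark that responds more to bandwidth than to latency, though it says nothing about the actual measured scores.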

Vladinemir

Active Member

Stumbled across this article

Could 11th gen become a possible option, then, if this instruction is used more widely? Those chips haven't received much love so far.


I care deeply about 400 compressors. They add an enchanting je ne sais quoi, especially between 40 kHz and 96 kHz. That's why I'm only working in 192 kHz sample rate 16x oversampling.

Thank goodness I can finally add that 400th compressor I've been needing

No, they are old tech, produce too much heat, and the AVX instructions make things even worse.

Could 11th gen become possible option then if this instruction will be used more widely? Those chips haven't received much love so far.

AMD vs Intel: Which CPUs Are Better in 2022?

We put AMD vs Intel in a battle of processor prowess.
