Ok. I'm going back to your original post.

My point of reference: we run multiple workstations (music composition and sound post) in a facility structure. So, YMMV, but I apply a lot of that facility thinking to my own personal composition setup.

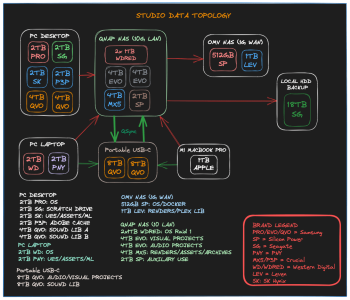

Projects first. These need the least bandwidth, and are arguably the most important part of your setup from a backup point of view. We now run ALL projects off a 10GbE NAS. The NAS takes care of daily snapshot backups and also runs a fully redundant secondary NAS that mirrors both the storage pool AND the snapshot pool. Finally, the snapshots are backed up to our own private cloud.

At home, I'm now starting to run my projects off a similar NAS. It takes its own internal snapshots, and all of that is mirrored to the backup NAS at the studios, which in turn goes to the (private) cloud.

While I love FreeNAS/TrueNAS, we run QNAP (QuTS hero) with lots of precautions to mitigate hacks (which have been a problem for others who don't run firewalls or who expose their NAS to the wider web). Our particular approach is to run pfSense on its own dedicated hardware, and to have anyone connecting to our system use a similar setup. Similar thinking to:

https://www.servethehome.com/inexpe...firewall-box-review-intel-j4125-i225-pfsense/

You don't need to worry about partitions etc. for projects and the like. I understand the thinking behind this from 10+ years ago (and why so many folk did it), but today it most likely just overcomplicates things.

As for the NAS, choose a file system that you know can still be read on new hardware in the future. We use ZFS.

1GbE is completely fine (if not speedy) for a single user on a single NAS, though you will notice slower save times than when saving locally. We use 10GbE (with a solid-state storage pool), and it's superb for speed: it has absolutely no trouble running multiple studios with large sessions all at the same time. For home use, I'd look at inexpensive 2.5GbE.
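To put rough numbers on the 1GbE / 2.5GbE / 10GbE difference, here is a minimal Python sketch of best-case save times. It assumes nominal line rates and a hypothetical 2 GB session; real throughput will be lower once SMB/TCP overhead and the NAS's own disk speed come into play.

```python
# Best-case transfer time for saving a session to a NAS over different links.
# Assumption: nominal line rates only; protocol overhead (SMB/NFS, TCP) and the
# NAS's disk speed are ignored, so real-world times will be longer.

LINK_GBIT_PER_S = {
    "1GbE": 1.0,
    "2.5GbE": 2.5,
    "10GbE": 10.0,
}


def seconds_to_transfer(size_gb: float, link_gbit_per_s: float) -> float:
    """Seconds to move size_gb decimal gigabytes over a link of the given rate."""
    bits = size_gb * 8 * 1e9
    return bits / (link_gbit_per_s * 1e9)


if __name__ == "__main__":
    session_gb = 2.0  # hypothetical session size, for illustration only
    for name, rate in LINK_GBIT_PER_S.items():
        print(f"{name}: {seconds_to_transfer(session_gb, rate):.1f} s")
```

Run as-is, it prints 16.0 s, 6.4 s and 1.6 s for the three links, which is why 2.5GbE is already a noticeable step up from 1GbE at home.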

So why do I say this? Well, a NAS is just more robust than directly connected or internal storage. It's designed so that backups happen regardless of which computer is connected to it. Change your computer and you don't need to set anything up; just connect to the network. You can also control things like outside connections (if you want remote access to your data, etc.). Managing the data is super easy, and you can also protect it if needed (more important in a multi-user environment).

It also makes setting up redundancy within the pools simple. Redundancy doesn't equal backup; it just means you don't need to stop working when a drive fails. Zero downtime is the key when you have a delivery for a Netflix show that is mixing tomorrow!

Then samples.

I've written on here multiple times that I use single large NVMe drives these days. PCIe Gen3 8TB drives are awesome for samples. I use an external Thunderbolt setup, but internal is cool too. The way I see it: sure, I'd like more space, but this is enough for the next few years, and by then I'll be able to get a single 16TB drive. Indeed, I have a single 15TB U.2 drive sitting next to me right now for some other tests, which would actually make an AMAZING sample drive with the right setup. It's way too expensive for most composition purposes (but worth it for other data purposes).

And if you want to gradually expand a single sample volume (which I recommend over spreading samples across multiple drives), then definitely look into disk spanning / amalgamation software. Some of it is amazing. Look for tools built on file systems that are both modern AND well supported.
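For anyone unfamiliar with spanning, here's a minimal Python sketch of the idea behind a linear span (concatenation): several drives appear as one volume, and each logical address maps to a drive and an offset on that drive. The `SpannedVolume` class and its whole-GB addressing are hypothetical, purely for illustration. Real tools differ: some work at the block level like this, while file-level pooling tools place whole files on member drives instead. Note that a plain span has no redundancy, so losing one drive loses whatever lived on it.

```python
from bisect import bisect_right
from itertools import accumulate


class SpannedVolume:
    """Hypothetical linear span (concatenation) of several disks.

    Sizes and addresses are whole GB to keep the arithmetic exact.
    """

    def __init__(self, disk_sizes_gb: list[int]) -> None:
        if any(size <= 0 for size in disk_sizes_gb):
            raise ValueError("disk sizes must be positive")
        self.disk_sizes = list(disk_sizes_gb)
        # starts[i] is the first logical GB address that lives on disk i.
        self.starts = list(accumulate(self.disk_sizes, initial=0))[:-1]
        self.total = sum(self.disk_sizes)

    def locate(self, logical_gb: int) -> tuple[int, int]:
        """Map a logical address to (disk index, offset within that disk)."""
        if not 0 <= logical_gb < self.total:
            raise ValueError("address outside the volume")
        disk = bisect_right(self.starts, logical_gb) - 1
        return disk, logical_gb - self.starts[disk]

    def add_disk(self, size_gb: int) -> None:
        """Grow the volume: the new disk's space is appended at the end."""
        if size_gb <= 0:
            raise ValueError("disk sizes must be positive")
        self.starts.append(self.total)
        self.disk_sizes.append(size_gb)
        self.total += size_gb


if __name__ == "__main__":
    vol = SpannedVolume([8000, 4000])  # e.g. an 8TB and a 4TB drive
    print(vol.locate(9000))            # second drive, 1000 GB in
    vol.add_disk(16000)                # expand later with a 16TB drive
    print(vol.total)
```

Adding a drive simply appends its space to the end of the volume, which is what makes gradual expansion of a single sample volume straightforward.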

These days, while it is a PAIN to rebuild sample libraries from scratch, it isn't nearly as hard as it used to be. Indeed, I'm about to do exactly that for my Mac Studio system. With Kontakt 7, I'm going to organise things differently from how I've done it in the past. That's just my OCD kicking in; it's completely unnecessary, and more a symptom of not having ideas for a theatre score that I really need to get moving on...

Modern SSDs, be they SATA III, NVMe, U.2 or the like, are all amazing for samples. I doubt anyone would see any sort of bottleneck even running a SATA III SSD-based system. But if you go NVMe, I don't see any advance coming in the near future (other than larger single-drive capacities) that would force you to change or upgrade your system.

I have run sessions with thousands of voices at the same time. We run internal tests for qualifying systems in our studios, using our own custom Kontakt sample libraries. We have never got close to the limits of NVMe drives under ANY stress test. All the bottlenecks are way further down the line, in the single-core (zero core) demands of real-time audio systems. Old film mixes used to run off multiple computers, and many places still do that. However, even the largest Hollywood film could stream off a single drive with no stress these days. Most have moved to NAS-based storage, which is only 10GbE. That's slower than ANY NVMe drive (and tops out at about two SATA III SSDs in parallel).
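The 10GbE versus SATA III comparison, and why NVMe has so much headroom, can be sanity-checked with some back-of-envelope arithmetic. This Python sketch uses assumed typical figures (about 550 MB/s for a SATA III SSD, about 3500 MB/s for a PCIe Gen3 x4 NVMe drive) and treats every voice as uncompressed 24-bit / 48 kHz stereo streamed at full rate. Samplers preload sample starts and may use compressed formats, and the sketch ignores random-access behaviour, so it is a rough check rather than a benchmark.

```python
# Back-of-envelope bandwidth comparison (decimal units: 1 MB = 10**6 bytes).

TEN_GBE_MB_S = 10e9 / 8 / 1e6  # 10 Gbit/s nominal line rate -> 1250 MB/s
SATA3_SSD_MB_S = 550.0         # assumed typical SATA III SSD sequential read
NVME_GEN3_MB_S = 3500.0        # assumed typical PCIe Gen3 x4 NVMe sequential read


def voice_mb_s(sample_rate: int = 48_000, bytes_per_sample: int = 3,
               channels: int = 2) -> float:
    """Worst-case MB/s for one voice streaming uncompressed PCM from disk."""
    return sample_rate * bytes_per_sample * channels / 1e6


def voices_mb_s(n_voices: int, **fmt: int) -> float:
    """Total MB/s for n_voices, all in the same (keyword-overridable) format."""
    return n_voices * voice_mb_s(**fmt)


if __name__ == "__main__":
    print(f"10GbE ~ {TEN_GBE_MB_S / SATA3_SSD_MB_S:.1f} SATA III SSDs")
    print(f"1000 voices ~ {voices_mb_s(1000):.0f} MB/s")
    print(f"NVMe headroom ~ {NVME_GEN3_MB_S / voices_mb_s(1000):.0f}x")
```

With those assumptions it prints roughly 2.3 SATA III SSDs' worth of bandwidth for 10GbE, about 288 MB/s for 1000 voices, and around 12x headroom on the NVMe drive.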

Backup for samples doesn't need to be as robust as for projects, as most of the time it's just a matter of waiting for new downloads. I keep archives of all downloads locally on the network to speed things up, but (rightly or wrongly) I no longer keep a full backup of my sample drive. I have 2 complete computer systems I compose from, each with its own sample drive: the laptop has an 8TB internal NVMe, and the Mac Studio uses an 8TB NVMe drive in an enclosure. When travelling with the laptop, I just carry the external NVMe drive with me as a backup. This backup does NOT work for some non-Kontakt libraries, though, which is why I keep the installer archives close at hand (and I can access them remotely if for some reason I can't re-download from a sample company). Would I like a better solution? Sure! But the complexity is already enough, and I've weighed up the risks.

Anyway. There's my 2c. I'm getting back to building my new sample drive and hopefully making some noise.